So, this whole thing kicked off because I hit a wall with local storage: it just doesn't scale with you forever, you know? Plus, putting all my eggs in other companies' baskets felt a bit risky, with all the changing rules and government access to data these days.

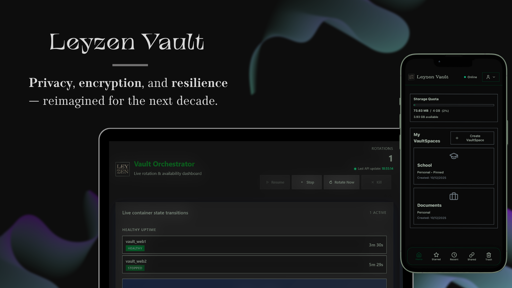

What I ended up with is pretty cool: a personal file vault where I'm in charge. It treats any external storage as untrusted, and all the encryption happens right on my own computer. I can even use cloud storage like S3 if I need to, without ever losing control of my data.

Honestly, it just kinda grew on its own; I never set out to build a product. I’m mainly sharing it here to see how other folks deal with these kinds of choices.

You can check it out at https://www.leyzen.com/

Here's a simple way to look at it: it's all about persistence. If someone sneaks a backdoor onto a server or into a container, that backdoor usually needs the environment to stay put.

But with containers that are regularly torn down and rebuilt, that persistence gets cut off. We log what happened, the old container gets shut down, and a brand-new one takes its place. The service keeps running smoothly for users, but whatever the attacker planted vanishes with the old container.

It's not about claiming hacks will never happen, but about making it much harder for them to stick around :)

That is not an answer.

I'm not looking for a simple way to look at it. I want a technical breakdown of why rebuilding backend instances is valuable in a security context.

I’ll be blunt with you: your answers to this and others have been very surface-level and scant on technical details, which gives a strong impression that you don’t actually know how this thing works.

You are responsible for your output. If you want ChatGPT or GitHub AI tools to help you, that's fine, but you still need to understand how the whole thing works.

You are making something "secure"; you need to be able to explain how that security works.

Nobody here asked for technical details, so I didn't go into them. But now that you ask, here's the breakdown:

The rebuild occurs periodically: you set the period (in seconds) in the .env file. A container named orchestrator stops the vault containers and rebuilds them, deleting every file that isn't in the database and therefore isn't encrypted (payloads, for example). As for event-based triggers, I haven't implemented any specific ones yet, but I plan to.
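
Here's a rough sketch of that periodic loop, just to make the mechanism concrete. It is not the project's code: the variable name REBUILD_INTERVAL_SECONDS and the 3600-second default are hypothetical (the real .env key isn't shown here), and the actual rebuild step is passed in as a callback, since talking to Docker is a separate concern. It shows the scheduling and the "keep what's in the database, drop everything else" rule.

```python
import os
import time
from typing import Callable, Iterable, Mapping, Optional, Set

# Hypothetical variable name; the project's real .env key isn't given in this thread.
INTERVAL_VAR = "REBUILD_INTERVAL_SECONDS"


def read_interval(env: Optional[Mapping[str, str]] = None, default: int = 3600) -> int:
    """Read the rebuild period (in seconds) from the environment."""
    env = os.environ if env is None else env
    interval = int(env.get(INTERVAL_VAR, default))
    if interval <= 0:
        raise ValueError("rebuild interval must be a positive number of seconds")
    return interval


def files_to_discard(on_disk: Iterable[str], in_database: Set[str]) -> Set[str]:
    """The rebuild rule: anything the database doesn't know about is thrown away."""
    return set(on_disk) - in_database


def run_rebuild_loop(
    rebuild: Callable[[], None],
    interval: int,
    max_cycles: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Wait `interval` seconds, call `rebuild`, repeat; `max_cycles` bounds the loop for testing."""
    cycles = 0
    while max_cycles is None or cycles < max_cycles:
        sleep(interval)
        rebuild()  # e.g. stop the vault containers and start fresh ones
        cycles += 1
    return cycles
```

In a real deployment you'd call something like `run_rebuild_loop(rebuild_vaults, read_interval())` with a hypothetical `rebuild_vaults` function and let it run forever; `max_cycles` and `sleep` exist only so the loop can be exercised without actually waiting.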

Session tokens are stored encrypted in the database, so when a vault container is rebuilt, sessions remain intact thanks to Postgres.

Same as with session tokens: auth tokens are stored in the database and are never lost, even when the whole stack is rebuilt.
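
A minimal sketch of why a rebuild doesn't log anyone out: the session record lives in the database, not in the container, so a freshly built app instance pointed at the same database still recognizes the token. The same reasoning covers auth tokens. Assumptions: sqlite3 stands in for Postgres, the table and class names are hypothetical, and the sketch stores a SHA-256 hash of each token rather than an encrypted copy (Python's standard library has no cipher). So it shows where the state lives, not how the project actually protects it.

```python
import hashlib
import secrets
import sqlite3
from typing import Optional


def _digest(token: str) -> str:
    # Only a hash of the token is stored; the raw token stays with the client.
    return hashlib.sha256(token.encode()).hexdigest()


class VaultApp:
    """Stands in for one vault container; it keeps no session state of its own."""

    def __init__(self, db: sqlite3.Connection):
        self.db = db
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS sessions "
            "(token_hash TEXT PRIMARY KEY, user_id TEXT NOT NULL)"
        )

    def login(self, user_id: str) -> str:
        token = secrets.token_urlsafe(32)
        with self.db:  # commits the insert
            self.db.execute(
                "INSERT INTO sessions (token_hash, user_id) VALUES (?, ?)",
                (_digest(token), user_id),
            )
        return token

    def whoami(self, token: str) -> Optional[str]:
        row = self.db.execute(
            "SELECT user_id FROM sessions WHERE token_hash = ?", (_digest(token),)
        ).fetchone()
        return row[0] if row else None


db = sqlite3.connect(":memory:")  # plays the role of the long-lived Postgres container
old_instance = VaultApp(db)
token = old_instance.login("alice")
del old_instance                   # the vault container is torn down...
new_instance = VaultApp(db)        # ...and a fresh one starts against the same database
print(new_instance.whoami(token))  # alice
```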

Yes, but not everything. Since one container (the orchestrator) needs access to the host's Docker socket, I don't mount the socket into it directly. Instead, I put a separate container in between, with an allowlist that prevents the orchestrator from shutting down services like Postgres. Requests to this container are authenticated with a token. I do rotate this token: it is derived from a secret_key stored in the .env and regenerated each time using Argon2id with random parameters. I also use Docker networks to isolate containers that don't need to communicate with each other, like the vault containers and the "docker socket guardian" container.
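
Roughly how a "docker socket guardian" like this could decide what to forward: check the caller's token in constant time, then allow only lifecycle calls that target vault containers, so a request to stop postgres is refused. Everything here is a hypothetical illustration: the container names, the allowed actions, and the token derivation, which uses hashlib.scrypt with a random salt as a stand-in for Argon2id (not available in Python's standard library). How the rotated token reaches the orchestrator is left out. The paths mirror the Docker Engine API's container endpoints such as `/containers/{id}/stop`, with an optional `/v1.xx` version prefix.

```python
import hashlib
import hmac
import re
import secrets
from typing import Tuple

# Hypothetical names: only the vault containers may be touched through the guardian.
ALLOWED_CONTAINERS = {"vault-1", "vault-2"}
ALLOWED_ACTIONS = {"start", "stop", "restart"}

# Matches e.g. /v1.43/containers/vault-1/stop or /containers/vault-1/restart
_CONTAINER_ACTION = re.compile(r"^(?:/v\d+\.\d+)?/containers/([^/]+)/([a-z]+)$")


def derive_token(secret_key: bytes) -> Tuple[bytes, bytes]:
    """Derive a fresh token from the .env secret; scrypt stands in for Argon2id here."""
    salt = secrets.token_bytes(16)  # new random salt on every rotation, so each token differs
    token = hashlib.scrypt(secret_key, salt=salt, n=2**14, r=8, p=1, dklen=32)
    return token, salt


def is_allowed(presented: bytes, expected: bytes, method: str, path: str) -> bool:
    # Constant-time comparison so the token can't be recovered via timing differences.
    if not hmac.compare_digest(presented, expected):
        return False
    match = _CONTAINER_ACTION.match(path)
    if method != "POST" or match is None:
        return False  # anything that isn't a container lifecycle call is refused
    container, action = match.groups()
    return container in ALLOWED_CONTAINERS and action in ALLOWED_ACTIONS
```

With a valid token, `POST /v1.43/containers/vault-1/restart` passes, while `POST /containers/postgres/stop` is rejected even though the token is correct; that second check is the whole point of putting an allowlist between the orchestrator and the raw socket.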

Every item has its own blob: one blob per file. For folders, I use a hierarchical tree in the database: each file has a parent ID pointing to its folder, and files at the root have no parent ID.
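
A minimal sketch of that layout, with sqlite3 standing in for Postgres and hypothetical table and column names: each row is a file or folder, parent_id points to the containing folder (NULL at the root), and each file row references its own blob. A recursive CTE rebuilds a file's full path by walking up the parent links.

```python
import sqlite3
from typing import List, Optional

db = sqlite3.connect(":memory:")
db.executescript(
    """
    CREATE TABLE items (
        id        INTEGER PRIMARY KEY,
        name      TEXT NOT NULL,
        parent_id INTEGER REFERENCES items(id),  -- NULL means the item sits at the root
        blob_key  TEXT                            -- one blob per file; NULL for folders
    );
    INSERT INTO items VALUES (1, 'photos', NULL, NULL);
    INSERT INTO items VALUES (2, '2024', 1, NULL);
    INSERT INTO items VALUES (3, 'beach.jpg', 2, 'blob-3');
    INSERT INTO items VALUES (4, 'notes.txt', NULL, 'blob-4');
    """
)


def full_path(item_id: int) -> Optional[str]:
    """Walk parent_id links up to the root and join the names into a path."""
    rows = db.execute(
        """
        WITH RECURSIVE ancestors(id, name, parent_id, depth) AS (
            SELECT id, name, parent_id, 0 FROM items WHERE id = ?
            UNION ALL
            SELECT i.id, i.name, i.parent_id, a.depth + 1
            FROM items i JOIN ancestors a ON i.id = a.parent_id
        )
        SELECT name FROM ancestors ORDER BY depth DESC
        """,
        (item_id,),
    ).fetchall()
    return "/".join(r[0] for r in rows) if rows else None


def children(folder_id: Optional[int]) -> List[str]:
    """List the direct children of a folder; pass None for the root."""
    rows = db.execute(
        "SELECT name FROM items WHERE parent_id IS ? ORDER BY name", (folder_id,)
    ).fetchall()
    return [r[0] for r in rows]
```

Using `IS ?` instead of `= ?` lets the same query list root items (where parent_id is NULL) and the contents of any other folder.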

Can the app tune storage requirements depending on the S3 configuration? Not yet. S3 integration is a new feature, but I've added your idea to my personal roadmap. Thanks.

And I understand perfectly why you're asking this. No hate at all; I like feedback like this because it helps me improve.

Thanks for the reply.